Reading Length: 8 Minutes

Key Words: Game Theory, Military Strategy, Political Science

The 2014 blockbuster movie The Imitation Game reenacts the story of how the British cracked the German Enigma cipher machine during World War II. Enigma was not the only system broken: a handful of other encryption devices were cracked as well, and the intelligence from all of them fell under the umbrella of the British project codenamed Ultra.

The British cracked German ciphers relatively early in the war. By 1942, they could choose to act on intercepted intelligence to strike at targets. If they took that course, however, they risked tipping their hand by inadvertently revealing to the Germans that their codes were compromised.

One solution was to act on Ultra-decoded information while creating the false impression that the intelligence had been gained by other means:

In order to preserve the security of Ultra, they decided not to attack an enemy ship on the basis of Ultra alone. Instead, an aircraft had to be sent to the ship to make a visual report so that the enemy would believe that aerial reconnaissance rather than code breaking was responsible for sinking the ship.[1]

This is a paradigmatic dilemma of the art of surprise. When information or a new device, a ‘secret weapon’, has been developed without the counterparty’s awareness, there is a tradeoff between exploiting the surprise early and waiting for a more advantageous opportunity at the risk of its premature discovery. Axelrod provides another example, this time from the Yom Kippur War:

A good example of how it can pay to wait is presented by the Syrian decision not to use its new SAM air defense system in September 1973. Knowing that they would be attacking Israel less than a month later, the Syrians withheld the use of their new SAM system when a major air battle started over Syrian skies and then extended over the Mediterranean. Withholding the use of the SAMs cost Syria twelve planes to Israel's one, but it preserved the secrecy of the effectiveness of the system. The effectiveness of the SAMs in Egypt as well as in Syria was one of the major surprises the Israelis encountered in the opening days of the 1973 war.

Although these examples come from war, the structural problem extends to more mundane scenarios. In business, an enterprise may have a competitive advantage unbeknownst to the broader market. In sports, an athlete could have an innovative technique to spring on competitors. In litigation, a lawyer could obtain, midway through a trial, a damning piece of evidence that did not turn up during discovery. What do these scenarios have in common?

All these examples present the same structural problem: whether to wait or to exploit the resource immediately. In all of them, the stakes vary over time, so there may be a more important situation later than there is now. Likewise, in all of them, maintaining the resource has a cost. In all of them, using the resource puts its future value at risk. Finally, in all of them, there are several reasons to prefer a given payoff at once rather than wait for a future opportunity.

In the spirit of game theory, Axelrod takes these features of surprise and distills them into a simple gambling game:

The gambling game illustrates the basic problem in the rational timing of surprise. At each point in time there is a conflict between the desire to exploit the resource immediately for its potential gain, and a competing desire to pay the necessary cost to maintain the resource in the hope that an even better occasion for its use will come along soon.

This resembles the famous optimal ‘stopping problem’ in mathematics and economics, of which the ‘secretary problem’ is a well-known instance, and which is foundational for search theorists.
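Axelrod's exact rules for the gambling game are not reproduced in this text. The following is therefore only a hypothetical dice version, assembled from the features listed above: stakes that vary from turn to turn, a resource that can be lost whether it is used or held back, and a discount on future gains. The names `play_episode` and `estimate_value`, the parameters `p_hold`, `q_used_up` and `discount`, and their default values are all illustrative assumptions. The sketch follows the simplest strategy for a stopping problem of this kind, a threshold rule: use the resource whenever the roll reaches some level, and otherwise wait.

```python
import random


def play_episode(threshold, p_hold, q_used_up, discount, rng, max_turns=10_000):
    """Play one episode of a hypothetical dice version of the game.

    Assumed rules (illustrative only): each turn a fair six-sided die sets the
    stakes (1-6). If the roll is at least `threshold`, the resource is used, the
    stakes are won, and the resource is then used up with probability
    `q_used_up`. Otherwise it is held back and survives with probability
    `p_hold`. A gain k turns in the future is worth `discount**k` today.
    Returns the discounted total gain over the episode.
    """
    total, weight = 0.0, 1.0
    for _ in range(max_turns):
        stakes = rng.randint(1, 6)
        if stakes >= threshold:
            total += weight * stakes
            survives = rng.random() >= q_used_up  # used up with prob q_used_up
        else:
            survives = rng.random() < p_hold      # survives with prob p_hold
        if not survives:
            break
        weight *= discount
    return total


def estimate_value(threshold, p_hold=0.95, q_used_up=0.5, discount=0.9,
                   episodes=20_000, seed=0):
    """Monte Carlo estimate of the resource's value under a threshold rule."""
    rng = random.Random(seed)
    total = sum(play_episode(threshold, p_hold, q_used_up, discount, rng)
                for _ in range(episodes))
    return total / episodes


if __name__ == "__main__":
    for t in range(1, 7):
        print(f"use on rolls >= {t}: estimated value {estimate_value(t):.2f}")
```

Running the script prints a Monte Carlo estimate of the resource's value for each threshold. With these made-up parameters, waiting for a roll of at least 3 or 4 does better than using the resource at the first opportunity (threshold 1) or holding out for a 6.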

Once the dilemma has been abstracted into a simple dice game, it is straightforward to formalize it mathematically by introducing the key variables.

This equation captures the expected probabilities (P and S), the chance that the resource will be used up when exploited (Q), a discount rate (D), and the gain from exploitation (E); the objective is to maximize V.
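The equation itself does not appear in this text. As an illustration only, one way to write a value equation of this kind for a threshold strategy is shown here. Its assumptions may differ from Axelrod's own formulation. The stakes $S$ are drawn independently each period. The resource is used whenever $S \ge t$, and the gain from use equals the stakes. $P$ is taken to be the chance the resource survives a period in which it is held back. $Q$, following the description above, is the chance it is used up when exploited. $D$ is treated as a per-period discount factor. Expected gain now, plus the discounted value of still holding the resource next period, gives the first line. Solving it for $V(t)$ gives the second line. Comparing "use now" with "wait" at the optimum $V^* = \max_t V(t)$ gives the third.

$$
V(t) = \sum_{s \ge t} \Pr(S = s)\, s + D\left[P \Pr(S < t) + (1 - Q)\Pr(S \ge t)\right] V(t)
$$

$$
V(t) = \frac{\sum_{s \ge t} \Pr(S = s)\, s}{1 - D\left[P \Pr(S < t) + (1 - Q)\Pr(S \ge t)\right]}
$$

$$
\text{use at stakes } s \iff s + D(1 - Q)V^* \ge D P V^* \iff s \ge D\bigl(P - (1 - Q)\bigr)V^*
$$

Read this way, the threshold for acting rises as $Q$ rises, because use becomes costlier. It falls as $P$ falls, because holding becomes riskier.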

Of course, in the real world any estimate of these probabilities will be little more than guesswork. Axelrod recognizes this obvious limitation of the quantitative analysis:

In practice, quantitative measurements of variables, such as the chance that the resource will survive if used, are difficult to estimate with any great degree of confidence.

Although any realistic quantitative specification is out of reach, a formalization of the dilemma can still bring out useful insights:

Fortunately, a quantitative estimate is not always needed. There are a number of important policy implications that rely only on the qualitative assessment of the parameters.

Some of the implications that fall out of the formula are fairly obvious. For example, the more likely the resource is to be destroyed when it is used, the higher the stakes should be before using it. Some, however, are less obvious:

Patience is a virtue. When rare events have very high stakes, the best strategy may be to wait for these rare events before exploiting a resource for surprise. As we have seen, even if the resource has a good chance of survival when used, and even if there is some chance that it will be destroyed when not used, the distribution of stakes may be such that it still pays to wait for the very rare but important event.

Plugging in values shows that, for a wide range of parameters, the chance of a ‘very rare but important event’ can make waiting worthwhile even if the event occurs only 1% of the time.
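Both implications can be checked numerically under a simple threshold model. The model is an assumption of this sketch, not Axelrod's own equation. Stakes are drawn each period from a fixed distribution, and the gain from use equals the stakes. `p_hold` is the chance the resource survives a period in which it is held back. `q_used_up` is the chance it is used up when exploited, which is the role the text gives Q. `discount` is a per-period discount factor. Under these assumptions, the value of waiting until the stakes reach a threshold has a closed form, which the hypothetical function `value` computes. The hypothetical `best_threshold` searches over the possible stakes levels. All numbers are made up for illustration.

```python
def value(threshold, dist, p_hold, q_used_up, discount):
    """Value of holding the resource under a 'use when stakes >= threshold' rule.

    Assumed model (a sketch): `dist` maps each stakes level to its per-period
    probability; value = expected gain now + discount * P(survive) * value.
    """
    use_prob = sum(p for s, p in dist.items() if s >= threshold)
    gain = sum(p * s for s, p in dist.items() if s >= threshold)
    survive = p_hold * (1 - use_prob) + (1 - q_used_up) * use_prob
    return gain / (1 - discount * survive)


def best_threshold(dist, **params):
    """Return the stakes level that maximizes value when used as the threshold."""
    return max(sorted(dist), key=lambda t: value(t, dist, **params))


# 1. The likelier the resource is to be used up when exploited (higher Q),
#    the higher the stakes needed before using it.
die = {s: 1 / 6 for s in range(1, 7)}  # hypothetical: stakes set by a die roll
for q in (0.1, 0.5, 0.9):
    t = best_threshold(die, p_hold=0.95, q_used_up=q, discount=0.9)
    print(f"Q={q}: use the resource only on rolls >= {t}")

# 2. Patience: routine opportunities worth 1 occur 99% of the time and a rare
#    one worth 100 occurs 1% of the time; holding carries a 1% risk of loss.
rare = {1: 0.99, 100: 0.01}
for q in (0.1, 0.5):
    now = value(1, rare, p_hold=0.99, q_used_up=q, discount=0.95)
    wait = value(100, rare, p_hold=0.99, q_used_up=q, discount=0.95)
    print(f"Q={q}: use at once {now:.2f} vs wait for the rare event {wait:.2f}")
```

With these numbers, the best threshold climbs from 1 to 3 to 4 as Q rises from 0.1 to 0.9. Waiting for the 1% event beats using the resource at once in both cases. When use is fairly safe (Q = 0.1), the values are roughly 16.6 against 13.7. When it is not (Q = 0.5), they are 15.6 against 3.8.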

The virtue of patience raises problems for democracies and for other organizations whose leaders serve limited tenures, such as companies run by CEOs:

Since the optimal strategy will often require quite long waits, the bureaucratic incentives may not actually reflect the national interest. A bureaucrat or president may not have a very great probability of being in the same job when a sufficiently important event comes along. Therefore, he has an incentive to exploit the resource prematurely, since he is likely to care more about how his own performance looks than about how his successor or his successor's successor looks. The implication is that those who have control over the use of resources for a surprise should be rewarded for passing on a good inventory of undestroyed resources, as well as for the successful use of such resources.
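The tenure problem comes down to simple arithmetic. Suppose a sufficiently important event arrives independently in each period with probability p. The chance that it arrives at least once during a tenure of T periods is then 1 - (1 - p)^T, and the expected wait for it is 1/p periods. This sketch assumes a hypothetical 1% annual chance; the function names and numbers are illustrative only.

```python
def chance_within_tenure(p_per_period, tenure_periods):
    """Probability that an event with independent per-period probability
    `p_per_period` happens at least once in `tenure_periods` periods."""
    return 1 - (1 - p_per_period) ** tenure_periods


def expected_wait(p_per_period):
    """Mean number of periods until the first occurrence (geometric distribution)."""
    return 1 / p_per_period


# Hypothetical: a decisive opportunity arises in 1% of years.
p = 0.01
for years in (4, 8, 20):
    print(f"{years:>2}-year tenure: {chance_within_tenure(p, years):.1%} chance the event arrives")
print(f"expected wait: {expected_wait(p):.0f} years")
```

Under these assumptions, a four-year term sees the event only about 4% of the time. Even twenty years gives only about 18%, against an expected wait of 100 years. An officeholder who waits for the rare event will most likely leave office without ever using the resource, which is the incentive problem the passage describes.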

Another consequence of optimal patience is that a naive form of Bayesian reasoning can lead one astray when observing a counterparty during peacetime. If a counterparty is waiting for an extremely rare event before exploiting an advantage, it would be a mistake to grow more confident, with each successive ‘turn’ or day of inaction, that the counterparty has no advantage. ‘Updating your priors’ on each quiet observation in this way would lead to exactly the wrong conclusion:

For example, a rule of inference about the other side’s behavior which has worked in a series of low-stake situations may not work when the stakes are greater, precisely because the other side may have been waiting to exploit a standard operating procedure as a resource for surprise.
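A toy Bayesian calculation shows how this goes wrong. Suppose an observer starts with even odds that the other side holds a resource for surprise, then watches 20 low-stakes periods pass quietly. Suppose further that the observer assumes a holder would use the resource in any given period with probability 0.3. Each quiet period then counts as evidence against the resource, and the posterior collapses. If the holder is instead patient and saves the resource for high stakes, a quiet low-stakes period is exactly what a holder would produce, so it carries no evidence at all. The function name and all numbers are hypothetical.

```python
def posterior_has_resource(prior, quiet_periods, p_quiet_if_resource):
    """Bayes' rule for 'the other side holds a resource' after observing
    `quiet_periods` periods with no surprise.

    Assumes a side without the resource stays quiet with certainty, and a side
    with it stays quiet in each period independently with `p_quiet_if_resource`.
    """
    like_has = p_quiet_if_resource ** quiet_periods
    like_lacks = 1.0
    numerator = prior * like_has
    return numerator / (numerator + (1 - prior) * like_lacks)


prior = 0.5
# Naive model: a holder would use the resource in any period with chance 0.3.
naive = posterior_has_resource(prior, 20, p_quiet_if_resource=0.7)
# Patient model: every observed period was low-stakes, so a patient holder
# would have stayed quiet anyway.
patient = posterior_has_resource(prior, 20, p_quiet_if_resource=1.0)
print(f"naive posterior {naive:.4f}, patient-model posterior {patient:.2f}")
```

The naive observer ends up almost certain (posterior below 0.1%) that there is nothing to fear. Under the patient model, the correct posterior stays at one half.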

Further, following this logic, if parties are waiting for rare events to spring their surprises, then those rare events will be times of great unpredictability:

This leads to the fundamental principle that when stakes get very large, a great deal of surprise can be expected. Indeed, this may be one of the primary reasons why nations so often are overconfident about their ability to predict the actions of their potential opponents. They simply do not fully take into account the fact that being able to predict when the stakes are low does not provide a good reason to believe that prediction will be good when the stakes are high. Thus, knowing that the other side is predictable when the stakes are low should not be very comforting.

Thankfully, this unpredictability can act as a deterrent to high-stakes conflicts. If parties are indeed saving their surprises for rare, all-out warfare, then everyone involved should be highly hesitant to enter such a conflict in the first place. Contrary to the doctrine of mutually assured destruction (MAD), it is precisely because destruction is not assured that deterrence holds.

Finally, it follows that as observation technology improves, the chance of being duped, counterintuitively, also increases:

The more an unsophisticated side can observe, the more readily it can be deceived. The reason is simply that the more a side can observe, the more things can be presented as patterns of behavior in order to build up a false sense of confidence in the ability to predict. Thus, the presence of ever more sophisticated photographic and electronic reconnaissance devices may simply allow the observed side to obtain more and more resources for surprise.

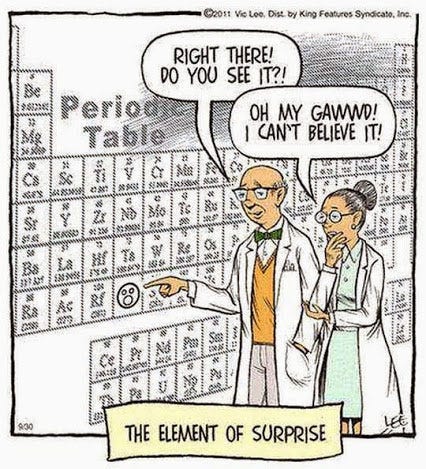

Extensive surveillance can lull people into complacency once they have learned the other side’s standard operating procedures. But this complacency is exactly what makes surprise possible.

Axelrod’s framework makes clear how bureaucratic constraints, such as term limits, and human folly can undermine the strategic rationality that rational-choice models assume. The aim of formulating this dilemma as a mathematical model is not to achieve exact precision but to tease out how strategic rationality can break down in reality and to suggest ways of ameliorating these constraints.

In his book Strategic Interaction, the eminent sociologist Erving Goffman considers the limitations of the game-theoretic perspective. Using the ‘game’ of espionage between countries as an example, Goffman analyzes what happens when there is the possibility of a ‘false double agent’:

A classic example of the degeneration of expression is found in the turning of intelligence agents. When, say, the British discover that one of their diplomats is a Russian spy and imprison him for forty-two years, and then five years later he escapes, what are the Russians to think? Is he their man and the information he gave them reliable? Was he all along a double agent feeding them false information and then imprisoned briefly to give false assurances that he had not been working for the British? Was he loyal to Russia but discovered by the British and, unbeknownst to himself, given false information to feed the Russians? Has he been allowed to escape so that the Russians would wrongly think that he had really been working for the British and therefore that his information had been false? And the British themselves, to know what import the Russians gave to the spy’s information, must know whether indeed the Russians think their man was really their man, and if so, whether or not this had been known by the start by the British.

This bewildering regress, which Goffman calls the ‘degeneration of expression’, shows how complicated the situation can become, especially when the secret weapon is an individual person.

While the above situation might sound fantastical, Goffman cites Perrault’s book The Secrets of D-Day, in which such a situation actually occurred. The Allies caught a French colonel who was spying for the Germans. They turned him into a double agent and fed him useless information so that the Germans would realize he had been turned and was being fed falsehoods. The Allies then gave him the real date of the D-Day invasion, so that the Germans would dismiss it as false as well. After D-Day, the Germans were fooled into believing that the colonel had not been a double agent after all and had been passing on real information, so they began trusting him.[2]

Clearly we are far outside the realm of Axelrod’s simple equation. Goffman closes his book, unsurprisingly, by making space for the sociological perspective:

The applicability of the gaming framework to relationships and gatherings, and its great value in helping to formulate a model of the actor who relates and who foregathers, has led to conceptualizations which, too quickly, intermingle matters which must be kept apart, at least initially. Social relationships and social gatherings are two separate and distinct substantive areas; strategic interaction is an analytical perspective which illuminates both but coincides with neither.

[1] Axelrod, ‘The Rational Timing of Surprise’, 1979. Axelrod cites two books for this anecdote, Bodyguard of Lies and Very Special Intelligence, in his footnote 8.

[2] Note that the French colonel is not a triple agent, which would be analytically simpler. The colonel is a double agent because he was turned from the Germans to the Allies. He is ‘false’ because he is fed real information in order to convince the Germans that the information is false, which afterwards restores their confidence in the ‘false’ agent.